Implementation Method (AI/ML Use Cases)

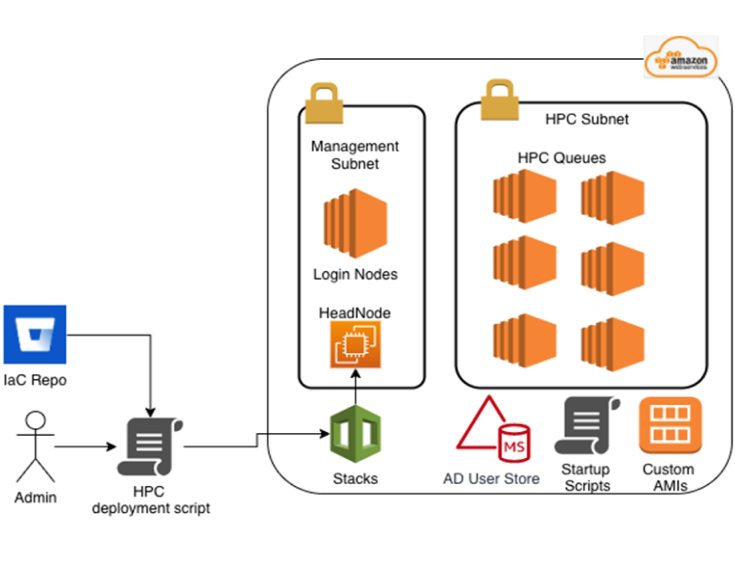

Use case 1: Build an automated HPC (high-performance computing) cluster on the AWS public cloud to handle compute-intensive data analytics workloads, including RStudio Workbench. This enables users in the customer's clinical pharmacology team to run data analytics and document generation using large-scale computing models in pharmacology. The cluster is tightly coupled with enterprise identity and access management policies, so user access is enabled or disabled based on AD group membership.
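The AD-group gating described in use case 1 can be illustrated with a minimal sketch. The group names, the `User` type, and the `has_cluster_access` helper are all hypothetical; the actual integration with the enterprise directory is not described here, so this only shows the membership check itself.

```python
from dataclasses import dataclass

# Hypothetical AD group names; the real groups are not given in this document.
ALLOWED_GROUPS = frozenset({"hpc-clinpharm-users", "hpc-admins"})


@dataclass(frozen=True)
class User:
    """A user together with the AD groups resolved for them (assumed input)."""
    name: str
    ad_groups: frozenset


def has_cluster_access(user: User, allowed_groups: frozenset = ALLOWED_GROUPS) -> bool:
    """Return True when the user belongs to at least one allowed AD group."""
    return not user.ad_groups.isdisjoint(allowed_groups)
```

Removing a user from every allowed group is then enough to disable access; no per-user state has to be kept on the cluster side in this sketch.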

Use case 2: Dynamically enable or disable internal, team-specific customizations for specific packages (e.g., Torsten, Stan, NONMEM) within every cluster before users run their workloads. This lets users use the cluster for varied purposes instead of a predefined set of use cases.
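One way to picture the per-team toggles in use case 2 is a small lookup with optional overrides. This is a sketch under assumptions: the team names, the `TEAM_PACKAGES` table, and the `enabled_packages` helper are hypothetical and do not reflect the actual cluster configuration format.

```python
# Hypothetical team-to-package toggles; real teams and defaults are not given here.
TEAM_PACKAGES = {
    "pharmacometrics": {"torsten": True, "stan": True, "nonmem": True},
    "biostatistics": {"torsten": False, "stan": True, "nonmem": False},
}


def enabled_packages(team, overrides=None):
    """Return the sorted package names to set up for a team.

    `overrides` maps a package name to True/False and takes precedence
    over the team's defaults, so a customization can be switched per run.
    """
    toggles = dict(TEAM_PACKAGES.get(team, {}))  # copy so defaults stay untouched
    toggles.update(overrides or {})
    return sorted(name for name, on in toggles.items() if on)
```

An unknown team resolves to an empty list in this sketch, which keeps the default cluster free of team-specific customizations.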

Use case 3: Enable users in deep learning (MLOps) teams to access independent queues within the existing cluster configuration so they can run deep learning models on GPU-specific instances. These GPU-intensive workflows can run with specific versions of libraries and tools, including but not restricted to PyTorch, Conda, Spack, Lmod, and Julia.
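The queue separation in use case 3 amounts to routing a job by its resource request. The sketch below assumes two queue names, `compute` and `gpu`, and a hypothetical `Job` type; the real queue names and scheduler are not stated in this document.

```python
from dataclasses import dataclass

# Hypothetical queue names; the actual cluster queues are not named here.
CPU_QUEUE = "compute"
GPU_QUEUE = "gpu"


@dataclass(frozen=True)
class Job:
    """A job request with the number of GPUs it asks for (assumed shape)."""
    name: str
    gpus: int = 0


def select_queue(job: Job) -> str:
    """Route jobs that request GPUs to the GPU queue, everything else to CPU."""
    if job.gpus < 0:
        raise ValueError("gpus must be non-negative")
    return GPU_QUEUE if job.gpus > 0 else CPU_QUEUE
```

Keeping the routing rule in one function means CPU-only analytics jobs never occupy GPU instances, which is the point of the independent queues.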

Use case 4: Customize RStudio Package Manager to freeze specific versions of R libraries, ensuring compatibility across teams.
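The effect of freezing versions in use case 4 can be checked by comparing what a team has installed against the pinned set. This sketch does not call RStudio Package Manager; the `PINNED` manifest, its package versions, and the `find_version_drift` helper are illustrative assumptions.

```python
# Hypothetical pinned manifest; package names and versions are illustrative only.
PINNED = {"dplyr": "1.1.4", "ggplot2": "3.4.4"}


def find_version_drift(installed, pinned=PINNED):
    """Return {package: (pinned_version, installed_version)} for mismatches.

    A package missing from `installed` is reported with None as its
    installed version.
    """
    drift = {}
    for package, wanted in pinned.items():
        found = installed.get(package)
        if found != wanted:
            drift[package] = (wanted, found)
    return drift
```

An empty result means the environment matches the frozen versions; any entry points to a library that could behave differently across teams.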

Use case 5: Deploy and integrate RStudio with RSConnect to publish Shiny apps.